“Toto, I’ve a feeling we’re not in Kansas anymore.”

In the film The Wizard of Oz (1939), Dorothy and her small dog are transported by a tornado to a strange land called Oz, where everything is different but fundamental virtues remain the same. Her previous existence, one of order, rationality and conformity, is replaced by the unusual, the scary and the unknown.

Just over a year ago, I wrote a textbook on strategic risk management in which I stated, “We are faced with possibilities of new threats and hazards that might make wonderful material for blockbuster movies but give health and safety professionals sleepless nights.” The traditional approaches to health, safety, welfare and security, which served us so well in the industrialised, mechanised and engineered environments of the 20th century, are beginning to show their age.

Technology is changing faster than engineering techniques can keep up with. New technology creates new threats and hazards which current systems are not designed to deal with. The increasing pace of development, production and consumption leaves no time to build experience and knowledge of how new products and services operate. We are building systems that are beyond our ability to intellectually manage. These systems are sometimes managed by artificial intelligence whose inner processing, again, we don’t fully comprehend. A piece of code could be written, embedded in nanotechnology and, several years later, used in equipment that detects temperature changes in a nuclear reactor. Several years after that, long past the point at which the source of that code could still be identified, the code could produce a result that the equipment using it is not designed to handle. The resulting catastrophe and subsequent investigations may never identify what caused the failure.

Traditionally, health and safety management is based on scenario planning (“what if” thinking) and on knowledge gained over many decades of engineering, science and case studies. As this pandemic has demonstrated, even good planning and preparation can still be compromised by emerging information and derailed by political and societal pressures. The vagaries of human behaviour have shown that you can’t legislate against fear and irrationality.

The new Kansas?

However, more recent thinking has begun to view human behaviour as a potential source of safety as well as a threat. This requires turning health and safety science on its head. Instead of examining health and safety failures, carrying out investigations, gathering data and looking for root causes, a different aspect is examined: how accidents and injuries are avoided or minimised by human behaviour that is not included in the policies and procedures laid down by the organisation. To date, the emphasis has fallen on studying where systems have failed and trying to engineer prevention, rather than on enabling and empowering humans to work out problems for themselves.

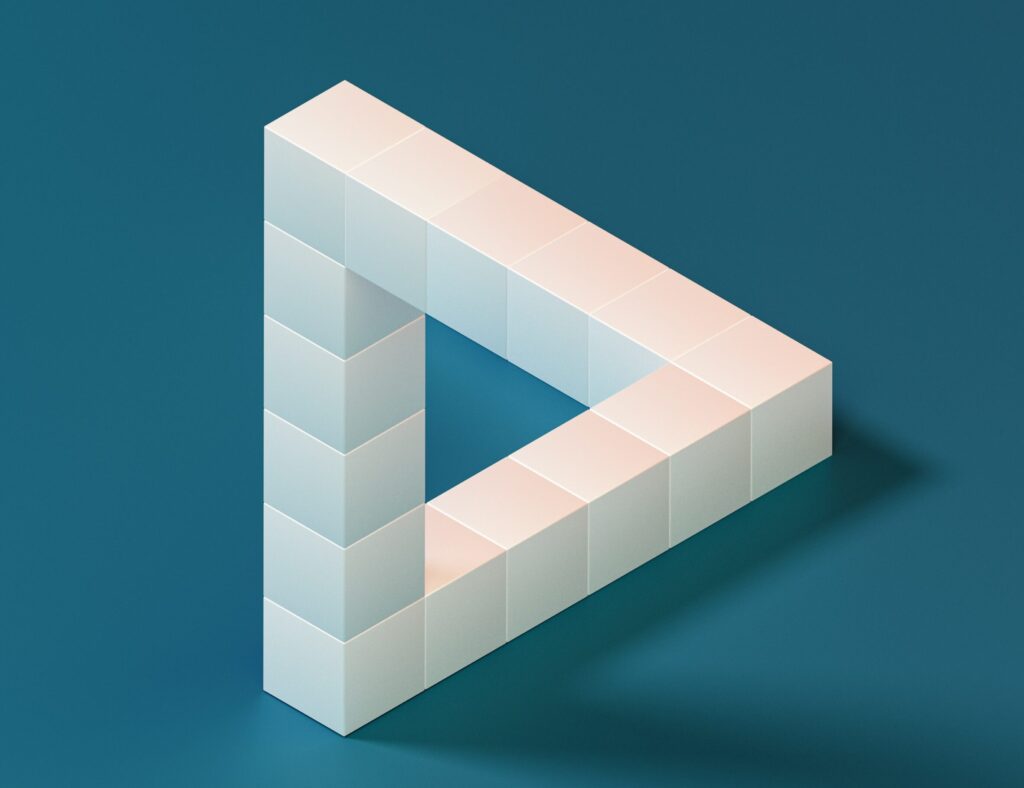

The traditional approach has resulted in ‘safety paradoxes’:

- Safety is defined and measured more by its absence than by its presence.

- Measures designed to enhance a system’s safety – defences, barriers and safeguards – can also bring about its destruction.

- Many, if not most, engineering-based organisations believe that safety is best achieved through a predetermined consistency of their processes and behaviours, but it is the uniquely human ability to vary and adapt actions to suit local conditions that preserves system safety in a dynamic and uncertain world.

- An unquestioning belief in the attainability of absolute safety (zero accidents or target zero) can seriously impede the achievement of realisable safety goals.

(Reason, 2000).

There has been an increasing realisation that safety cannot be achieved through technical means alone. There is also an acceptance that absolute safety is impossible and that no design strategy can eliminate all risks, particularly because of the many uncertainties involved.

The argument for this view is that some risks are unexpected and unknown, and that the traditional approach to health and safety relies on chosen experts rather than on those who actually use the systems, which leaves gaps in knowledge. The proposed solution is to design for ‘shared responsibility’ for safety across all the actors involved. This distribution should be complete, fair and effective, which also means that each agent should have a fair share of responsibility for shaping the design and employment of technology and safety systems. It could be argued that this also applies to public health, and that it is particularly relevant when one individual can infect many other people.

How the design of the response to this pandemic (and future pandemics) could be created by people other than medical experts and government ministers will be explored in the next article.

Reference

Reason, J. (2000). Safety paradoxes and safety culture. Injury Control and Safety Promotion. [online] DOI: 10.1076/1566-0974(200003)7:1;1-V;FT003. Available at: https://doi.org/10.1076/1566-0974(200003)7:1;1-V;FT003